07. Sequence to sequence in TensorFlow

TensorFlow provides several APIs that help you build a sequence-to-sequence model (seq2seq for short). It’s important to note that these APIs changed at the end of 2016 and are still evolving. Many of the tutorials you’ll find on the web for seq2seq in TensorFlow use the now-deprecated tf.contrib.legacy_seq2seq (previously called “tf.nn.seq2seq”).

The modules of note for seq2seq are:

- tf.nn, which allows us to construct different kinds of RNNs.

- tf.contrib.rnn, which defines a number of RNN cells (an RNN cell is a required parameter for the RNNs defined in tf.nn).

- tf.contrib.seq2seq, which contains seq2seq decoders and loss operations.

The Main Components

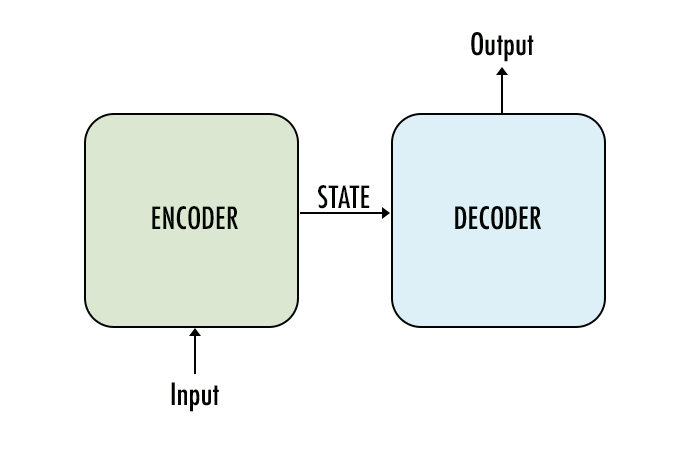

If we take a high-level view, a seq2seq model has these main components:

- Encoder: this is a tf.nn.dynamic_rnn.

- Decoder: this is a tf.contrib.seq2seq.dynamic_rnn_decoder.
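
To make the hand-off between the two components concrete, here is a minimal plain-Python sketch. It does not use TensorFlow, and the names `step`, `encode`, and `decode` are hypothetical stand-ins invented for illustration. It assumes the common seq2seq arrangement in which the encoder's final state becomes the decoder's initial state, and it feeds each decoder output back in as the next input, as you would at inference time.

```python
import math


def step(x, state):
    """Hypothetical toy RNN step on scalars: returns (output, new_state)."""
    new_state = math.tanh(0.5 * x + 0.5 * state)
    return new_state, new_state


def encode(source, state=0.0):
    """Run the encoder over the whole source sequence; keep only the final state."""
    for x in source:
        _, state = step(x, state)
    return state


def decode(encoder_state, start_token, num_steps):
    """Start from the encoder's final state; feed each output back in as the next input."""
    state, x, generated = encoder_state, start_token, []
    for _ in range(num_steps):
        x, state = step(x, state)
        generated.append(x)
    return generated


context = encode([0.2, -0.4, 0.9])                       # encoder summary of the source
target = decode(context, start_token=1.0, num_steps=4)   # decoder unrolled for 4 steps
```

In the real model the encoder and decoder are separate RNNs with their own weights, and during training the decoder is usually fed the ground-truth target tokens rather than its own outputs.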

tf.nn.dynamic_rnn

QUESTION:

Read the documentation of tf.nn.dynamic_rnn. What inputs will we pass into this function?

ANSWER:

Reading the documentation for tf.nn.dynamic_rnn, you'll see this signature:

tf.nn.dynamic_rnn(cell, inputs, sequence_length=None, initial_state=None, dtype=None, parallel_iterations=None, swap_memory=False, time_major=False, scope=None)

So you need to pass in at least the RNN cell you built (for example, tf.contrib.rnn.BasicLSTMCell). You'll also need to give it the inputs tensor, which in this case is the input text data, typically coming from the embedding layer. I also typically pass in an initial_state, which you've seen in the previous RNN lessons. If you leave initial_state out, you must pass dtype instead so the function can build the initial state itself.
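
To show what those arguments mean, here is a plain-Python sketch of the unrolling that a call like this performs conceptually. It is not TensorFlow's implementation: `toy_cell`, `toy_dynamic_rnn`, and the `num_units` argument are hypothetical names made up for this example. It assumes batch-major inputs (the time_major=False default) and mirrors the documented behaviour of sequence_length, where steps past a sequence's length produce zero outputs and the state is copied through.

```python
import math


def toy_cell(x, state):
    """Hypothetical stand-in for an RNN cell: (input, state) -> (output, new_state)."""
    new_state = [math.tanh(s + sum(x)) for s in state]
    return new_state, new_state


def toy_dynamic_rnn(cell, inputs, sequence_length=None, initial_state=None, num_units=2):
    """Unroll `cell` over batch-major inputs shaped [batch][time][features]."""
    batch_size = len(inputs)
    max_time = len(inputs[0])
    if sequence_length is None:
        sequence_length = [max_time] * batch_size
    if initial_state is None:
        # No state supplied: start every batch element from zeros.
        initial_state = [[0.0] * num_units for _ in range(batch_size)]

    outputs, final_states = [], []
    for b in range(batch_size):
        state = list(initial_state[b])
        row = []
        for t in range(max_time):
            if t < sequence_length[b]:
                out, state = cell(inputs[b][t], state)
            else:
                # Past the end of this sequence: zero output, state copied through.
                out = [0.0] * len(state)
            row.append(out)
        outputs.append(row)
        final_states.append(state)
    return outputs, final_states


inputs = [
    [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]],  # full-length sequence
    [[0.7, 0.8], [0.0, 0.0], [0.0, 0.0]],  # one real step, then padding
]
outputs, final_state = toy_dynamic_rnn(toy_cell, inputs, sequence_length=[3, 1])
```

Like the real function, the sketch returns a pair: the per-step outputs for every batch element and the final state. For the padded second sequence, the final state is the state after its single real step.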

seq2seq module

Read https://www.tensorflow.org/api_docs/python/tf/contrib/seq2seq to understand its major components. You can ignore everything with “attention” for the time being.